Neural Computations for Sensory Navigation: Mechanisms, Models, and Biomimetic Applications (2018)

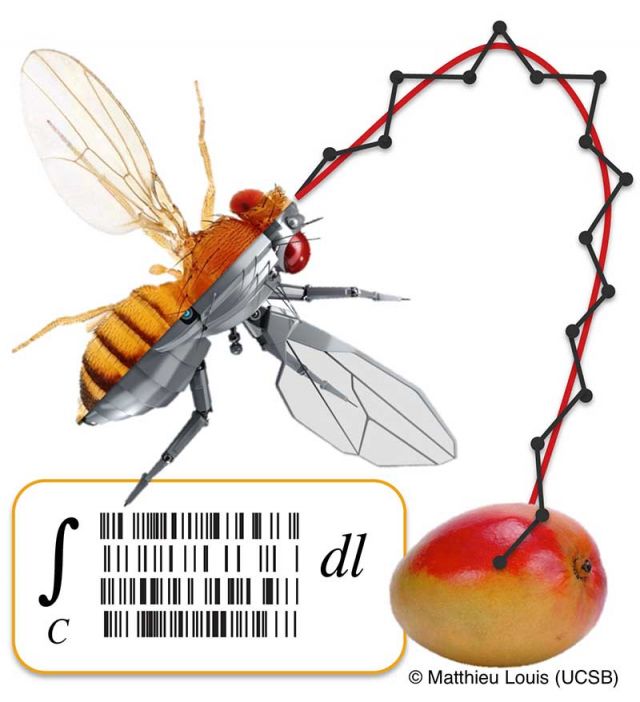

Animals use their brains to process sensory information that guides their movements through the environment. Visual, olfactory, thermal, and mechanosensory cues vary in space and time. Different animals prioritize different sensory information, but all must solve common challenges: detecting reliable cues, distinguishing self-generated changes from external ones, and integrating sensory inputs to control the motor outputs that compose search strategies. Mapping the neural circuits critical for sensory navigation across sensory modalities and organisms informs the development of models for the computations these circuits may perform. These models in turn suggest experiments to test how the circuits direct the elementary behavioral responses that form robust orientation strategies.

This program brought together scientists with diverse perspectives to consider new ways to advance the field. Combining modeling with model organisms, synthetic with biological implementations of neural circuits, and controlled inputs with quantified outputs required communication between experts in different disciplines. That communication was fostered by the environment of the KITP and by the QBio school on “Systems Neurophysics”, which ran in parallel with the program.